How to migrate mainframe-era data to modern, cloud-native architectures without losing structure, meaning, or speed.

Introduction to COBOL Modernization & Cloud Migration

The world still runs on COBOL, and that’s both impressive and terrifying.

Across banks, insurers, airlines, and governments, decades-old COBOL systems process trillions of dollars in transactions every day. They’re stable, reliable, and deeply entrenched. But they’re also rigid, expensive, and incompatible with today’s cloud-native world, a growing problem as organizations pursue digital transformation and move workloads to cloud infrastructure.

The biggest modernization challenge isn’t rewriting code; it’s migrating and remapping data models that have evolved silently over 30 years. This is where data model mapping becomes essential to legacy system modernization.

When done right, data mapping turns legacy chaos into clean, flexible, cloud-ready systems. When done wrong, it can derail entire transformation projects.

In this guide, Flynaut explains how to move from COBOL to cloud-native architectures safely by focusing on the most overlooked aspect of modernization: data model transformation, a core pillar of our custom software and enterprise app development work.

Why Data Models Matter in Modernization & Legacy System Transformation

Data is the lifeblood of every enterprise system. Yet, in legacy COBOL applications, data is often trapped in:

- Flat files, VSAM datasets, and hierarchical databases such as IMS.

- Hard-coded data structures defined in program logic.

- Proprietary record layouts with little documentation.

When organizations modernize, they often focus on code refactoring but neglect data architecture. That is a costly mistake in any COBOL-to-cloud migration.

A successful modernization doesn’t just translate code; it reimagines data models to fit a cloud-native world where data is:

- Accessible via APIs.

- Scalable across distributed systems.

- Governed for security and compliance.

- Structured for analytics, AI, and automation.

This aligns directly with Flynaut’s capabilities in AI integration services, modern data engineering services, and ETL modernization services.

The Challenge: COBOL’s Hidden Data Complexity in Legacy Systems

COBOL data definitions (COPYBOOKs, PIC clauses, and record hierarchies) are notoriously dense. A single line of COBOL code can pack multiple business rules and numeric encodings, which is why legacy data transformation calls for specialized expertise.

Example:

05 CUSTOMER-RECORD.

10 CUST-ID PIC 9(5).

10 CUST-NAME PIC X(30).

10 CUST-BALANCE PIC S9(7)V99 COMP-3.

This simple structure hides multiple challenges:

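- Packed-decimal fields (COMP-3) store two digits per byte plus a trailing sign nibble, and the decimal point (V) is implied rather than stored, so the raw bytes must be decoded.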

- Business rules are embedded in field naming conventions.

- Relationships between records are implicit, not defined in metadata.

When modernizing, these records must be mapped to relational or document-based schemas (e.g., PostgreSQL, MongoDB) without losing logic or integrity.
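To make the decoding challenge concrete, here is a minimal Python sketch that reads one `CUSTOMER-RECORD` as laid out above. It assumes the record is 40 bytes (5 + 30 + 5), that text fields use EBCDIC code page 037, and that the function names are illustrative rather than part of any particular tool.

```python
from decimal import Decimal

POSITIVE_SIGNS = {0xA, 0xC, 0xE, 0xF}   # 0xF means "unsigned", treated as positive
NEGATIVE_SIGNS = {0xB, 0xD}
RECORD_LENGTH = 40                      # 5 (CUST-ID) + 30 (CUST-NAME) + 5 (CUST-BALANCE)


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode an IBM packed-decimal (COMP-3) field into a Decimal."""
    if not raw:
        raise ValueError("empty COMP-3 field")
    nibbles = []
    for byte in raw:
        nibbles.append(byte >> 4)
        nibbles.append(byte & 0x0F)
    *digits, sign = nibbles             # the last nibble carries the sign
    if any(d > 9 for d in digits):
        raise ValueError(f"invalid digit nibble in {raw.hex()}")
    if sign not in POSITIVE_SIGNS | NEGATIVE_SIGNS:
        raise ValueError(f"invalid sign nibble in {raw.hex()}")
    value = int("".join(map(str, digits)))
    if sign in NEGATIVE_SIGNS:
        value = -value
    return Decimal(value).scaleb(-scale)  # apply the implied decimal point (V)


def parse_customer_record(record: bytes, encoding: str = "cp037") -> dict:
    """Split one fixed-length CUSTOMER-RECORD into typed fields."""
    if len(record) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} bytes, got {len(record)}")
    return {
        "cust_id": int(record[0:5].decode(encoding)),          # PIC 9(5)
        "cust_name": record[5:35].decode(encoding).rstrip(),   # PIC X(30)
        "cust_balance": unpack_comp3(record[35:40], scale=2),  # PIC S9(7)V99 COMP-3
    }
```

Returning `Decimal` rather than `float` keeps monetary values exact, which matters when the target column is a fixed-precision numeric type.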

The Right Way: Data Model Mapping Strategy for Modern Cloud Architectures

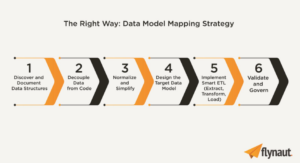

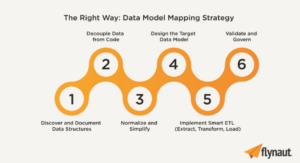

Modernization success hinges on a clear data mapping strategy. Flynaut’s proven framework uses six deliberate steps to migrate COBOL-era data models safely to the cloud, a process heavily supported by our cloud migration consulting, process automation services, and enterprise transformation consulting practices.

Step 1: Discover and Document Data Structures for COBOL Modernization

Before you migrate anything, you need a complete inventory of all data elements, structures, and dependencies.

Use automation and AI-powered discovery tools to:

- Parse COBOL COPYBOOKs to extract schema definitions.

- Identify hidden dependencies between programs and datasets.

- Generate entity-relationship (ER) diagrams automatically.

Goal: Build a Data Model Blueprint that supports future AI modernization, data modernization, and enterprise-wide scalability.
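As a rough illustration of the copybook-parsing step, the sketch below extracts field names, types, and byte lengths from simple copybook lines. It is deliberately limited: it handles only level numbers, `PIC` clauses, and `COMP-3`, and ignores `OCCURS`, `REDEFINES`, and other clauses a production parser would need. The `Field` class and function names are hypothetical.

```python
import re
from dataclasses import dataclass

# Matches e.g. "10 CUST-BALANCE PIC S9(7)V99 COMP-3" (trailing period already removed).
FIELD_RE = re.compile(
    r"(?P<level>\d{2})\s+(?P<name>[A-Z0-9-]+)"
    r"(?:\s+PIC\s+(?P<pic>\S+))?"
    r"(?:\s+(?P<usage>COMP-3))?"
)


@dataclass
class Field:
    level: int
    name: str
    kind: str            # "group", "alphanumeric", or "numeric"
    length: int          # storage size in bytes (0 for group items)
    scale: int = 0       # digits after the implied decimal point
    signed: bool = False
    usage: str = "DISPLAY"


def expand_pic(pic: str) -> str:
    """Expand repeat counts, e.g. 'S9(7)V99' -> 'S9999999V99'."""
    return re.sub(r"(.)\((\d+)\)", lambda m: m.group(1) * int(m.group(2)), pic.upper())


def parse_field(statement: str) -> Field:
    match = FIELD_RE.fullmatch(statement)
    if match is None:
        raise ValueError(f"unsupported copybook line: {statement!r}")
    level, name = int(match["level"]), match["name"]
    if match["pic"] is None:
        return Field(level, name, kind="group", length=0)
    picture = expand_pic(match["pic"])
    usage = match["usage"] or "DISPLAY"
    if "9" not in picture:
        return Field(level, name, kind="alphanumeric", length=len(picture))
    digits = picture.count("9")
    scale = picture.partition("V")[2].count("9")
    # Packed decimal stores two digits per byte plus one sign nibble.
    length = digits // 2 + 1 if usage == "COMP-3" else digits
    return Field(level, name, "numeric", length, scale, picture.startswith("S"), usage)


def parse_copybook(text: str) -> list[Field]:
    statements = (line.strip().rstrip(".").upper() for line in text.splitlines())
    return [parse_field(s) for s in statements if s]
```

The resulting list of `Field` objects can be serialized to JSON and stored as the first entry in a metadata repository.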

Step 2: Decouple Data from Code for Scalable Cloud Migration

Decoupling creates reusable, API-friendly structures, a requirement for modern enterprise app development services and cloud-native architectures.

- Extract and externalize data definitions into metadata repositories.

- Replace hard-coded dependencies with API-driven access layers.

- Introduce a canonical data model (CDM) that represents shared business entities (e.g., Customer, Policy, Transaction).

Introducing a Canonical Data Model ensures consistency across digital ecosystems, aligning with AI-powered data mapping and enterprise data transformation strategies.
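One way to picture a canonical entity is as a small, typed class that every consumer shares, with a single adapter translating legacy field names into it. The sketch below is an assumption about shape only; the `Customer` attributes and the adapter function are hypothetical, and a real CDM would be agreed with the business owners of each entity.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Customer:
    """Canonical Customer entity, independent of any COBOL layout."""
    customer_id: str
    full_name: str
    balance: Decimal


def customer_from_legacy(record: dict) -> Customer:
    """Adapter: the only place that knows the legacy field names."""
    return Customer(
        customer_id=f"{int(record['CUST-ID']):05d}",        # keep the 5-digit form
        full_name=" ".join(record["CUST-NAME"].split()),    # collapse padding
        balance=Decimal(str(record["CUST-BALANCE"])),
    )


def to_api_payload(customer: Customer) -> dict:
    """JSON-friendly view for an API layer; money is sent as a string."""
    return {
        "customerId": customer.customer_id,
        "fullName": customer.full_name,
        "balance": str(customer.balance),
    }
```

Because only `customer_from_legacy` refers to `CUST-ID` and friends, downstream services never depend on the copybook and can keep working if the source layout changes.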

Step 3: Normalize and Simplify Legacy Data Models

COBOL systems often store redundant and inconsistent data across multiple files. Flynaut uses normalization and deduplication techniques to:

- Identify overlapping entities.

- Eliminate redundant fields.

- Standardize naming conventions and formats.

Normalization eliminates redundancy and prepares data for SQL, NoSQL, or streaming databases, which makes it a prerequisite for downstream uses such as Industry 4.0 and IoT workloads.
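A simplified sketch of these three techniques follows. It assumes records have already been decoded into dictionaries, that `cust_id` identifies the overlapping entity, and that the last non-empty value should win when two files disagree. That merge rule is an illustrative assumption; real projects usually need a per-field survivorship policy.

```python
def to_snake_case(cobol_name: str) -> str:
    """Standardize a COBOL field name, e.g. 'CUST-ID' -> 'cust_id'."""
    return cobol_name.strip().lower().replace("-", "_")


def normalize_record(record: dict) -> dict:
    """Standardize field names and collapse padding in string values."""
    clean = {}
    for name, value in record.items():
        if isinstance(value, str):
            value = " ".join(value.split())
        clean[to_snake_case(name)] = value
    return clean


def merge_duplicates(records: list, key: str = "cust_id") -> list:
    """Merge records that share a key; the last non-empty value wins."""
    merged: dict = {}
    for record in map(normalize_record, records):
        target = merged.setdefault(record[key], {})
        for name, value in record.items():
            if value not in ("", None):
                target[name] = value
    return list(merged.values())
```

For example, a customer that appears in both a master file and a contact file collapses into one record carrying the fields from both.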

Step 4: Design the Target Data Model for Cloud-Native Architecture

Your target data model should reflect the needs of modern, distributed, cloud-native applications.

Depending on business goals, Flynaut helps you choose:

| Target Model | When to Use It | Examples |
| --- | --- | --- |
| Relational (SQL) | Transaction-heavy systems needing consistency | PostgreSQL, Amazon Aurora |
| Document-based (NoSQL) | Semi-structured, fast-evolving data | MongoDB, DynamoDB |
| Event-driven / Streaming | Real-time analytics and integration | Kafka, AWS Kinesis |
| Data Lake / Warehouse | Historical data and AI training | Snowflake, BigQuery |

Choosing the right target model is foundational, whether the goal is new cloud infrastructure, a re-engineered product, or a startup-scale platform.
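For the relational case, the mapping from `PIC` clauses to column types can be sketched as below. The rules are a simplified assumption (alphanumeric to `VARCHAR`, numeric to `NUMERIC(precision, scale)`), and the function names are hypothetical.

```python
import re


def pic_to_sql_type(pic: str) -> str:
    """Map a COBOL PIC clause to a PostgreSQL-style column type."""
    expanded = re.sub(r"(.)\((\d+)\)", lambda m: m.group(1) * int(m.group(2)), pic.upper())
    if "9" not in expanded:
        return f"VARCHAR({len(expanded)})"
    precision = expanded.count("9")                 # total digits
    scale = expanded.partition("V")[2].count("9")   # digits after the implied point
    return f"NUMERIC({precision},{scale})"


def create_table_ddl(table: str, fields: list) -> str:
    """Build a CREATE TABLE statement from (cobol_name, pic) pairs."""
    columns = [
        f"    {name.lower().replace('-', '_')} {pic_to_sql_type(pic)}"
        for name, pic in fields
    ]
    return f"CREATE TABLE {table} (\n" + ",\n".join(columns) + "\n);"
```

Identifier-like fields such as `CUST-ID` may be better stored as fixed-width text if leading zeros carry meaning; a type mapping like this is a starting point for review, not a final schema.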

Step 5: Implement Smart ETL (Extract, Transform, Load) for Legacy Data Transformation

Data migration isn’t just copy-paste; it’s a reengineering process.

Flynaut uses intelligent ETL pipelines to migrate VSAM, IMS, and flat-file data into modern cloud systems. Each pipeline follows four stages to ensure clean, validated transformations:

- Extract mainframe data without disrupting operations.

- Transform data formats, encodings, and numeric types.

- Validate against business rules (e.g., check digit logic, field ranges).

- Load data securely into the target cloud environment.

Key principle: Modernization should enhance data quality, not merely replicate old issues on new infrastructure.
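The validate-and-load stages might look like the following sketch. It uses an in-memory SQLite database as a stand-in for the cloud target, a Luhn check as one example of check digit logic, and hypothetical field names (`account_number`, `name`, `balance`); none of these are prescribed by a specific platform.

```python
import sqlite3
from decimal import Decimal

MAX_BALANCE = Decimal("9999999.99")   # largest magnitude a PIC S9(7)V99 field can hold


def luhn_valid(number: str) -> bool:
    """Return True if a digit string passes the Luhn check digit test."""
    checksum = 0
    for index, char in enumerate(reversed(number)):
        digit = int(char)
        if index % 2 == 1:            # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        checksum += digit
    return checksum % 10 == 0


def validate(record: dict) -> list:
    """Return a list of rule violations; an empty list means the record is clean."""
    errors = []
    number = record["account_number"]
    if not number.isdigit() or not luhn_valid(number):
        errors.append("account_number fails check digit")
    if not record["name"].strip():
        errors.append("name is empty")
    if abs(record["balance"]) > MAX_BALANCE:
        errors.append("balance out of range")
    return errors


def load(records: list, conn: sqlite3.Connection) -> list:
    """Insert valid records in one transaction; return (record, errors) rejects."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS account ("
        "account_number TEXT PRIMARY KEY, name TEXT NOT NULL, "
        "balance_cents INTEGER NOT NULL)"
    )
    rejects = []
    with conn:                        # commits on success, rolls back on error
        for record in records:
            errors = validate(record)
            if errors:
                rejects.append((record, errors))
                continue
            conn.execute(
                "INSERT INTO account VALUES (?, ?, ?)",
                (record["account_number"], record["name"].strip(),
                 int(record["balance"] * 100)),
            )
    return rejects
```

Routing failures to a reject list instead of aborting the run keeps the migration moving while giving data stewards a concrete queue of records to fix.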

Step 6: Validate and Govern Data for Compliance & Modern Data Quality Standards

Once migrated, data must be validated for completeness, integrity, and usability.

Flynaut integrates automated data validation frameworks that:

- Compare source vs. target record counts.

- Verify referential integrity.

- Audit field-level transformations.

- Tag data for compliance (GDPR, HIPAA, SOX).

We also implement data governance policies with cataloging, lineage tracking, and access controls, ensuring every data object is traceable and secure.
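The first three checks can be sketched in a few lines. This example assumes source and target rows are available as dictionaries with the same field names after transformation; the function names and report keys are illustrative.

```python
import hashlib


def row_fingerprint(row: dict) -> str:
    """Hash a row's fields in a stable order so two copies can be compared."""
    canonical = "|".join(f"{name}={row[name]}" for name in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: list, target: list, key: str) -> dict:
    """Compare record counts and field-level content between source and target."""
    src = {row[key]: row_fingerprint(row) for row in source}
    tgt = {row[key]: row_fingerprint(row) for row in target}
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


def orphaned_rows(children: list, parents: list, fk: str, pk: str) -> list:
    """Referential integrity: child rows whose foreign key has no parent."""
    parent_keys = {parent[pk] for parent in parents}
    return [child for child in children if child[fk] not in parent_keys]
```

A clean migration produces equal counts, three empty lists from `reconcile`, and an empty result from `orphaned_rows`; anything else points to specific keys to investigate.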

The Role of GenAI in Automated Data Mapping & Modernization

Modern data mapping isn’t entirely manual anymore. Generative AI plays a transformative role in accelerating and validating migrations.

GenAI applications include:

- Automated schema mapping: Suggests field correspondences between COBOL and cloud schemas.

- Business rule inference: Reads code and documentation to extract transformation logic.

- Data quality enhancement: Flags anomalies and suggests normalization rules.

- Documentation generation: Converts complex COBOL definitions into human-readable summaries.

Flynaut’s modernization team uses GenAI-driven tools to reduce mapping effort by up to 60% while improving accuracy.
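To show what a schema-mapping suggestion looks like in practice, here is a deliberately simple baseline that uses string similarity from Python's standard library instead of a generative model. It is not how any GenAI tool works internally; the abbreviation table, threshold, and function names are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical abbreviation dictionary; real projects build this from their own glossary.
ABBREVIATIONS = {"cust": "customer", "acct": "account", "addr": "address",
                 "amt": "amount", "nbr": "number"}


def normalize(name: str) -> str:
    """Lowercase, unify separators, and expand known abbreviations."""
    tokens = name.lower().replace("-", "_").split("_")
    return "_".join(ABBREVIATIONS.get(token, token) for token in tokens)


def suggest_mappings(source_fields: list, target_fields: list,
                     threshold: float = 0.6) -> dict:
    """Suggest the most similar target field for each source field.

    Returns {source: (target_or_None, score)}; None means no candidate
    reached the threshold and the field needs manual review.
    """
    suggestions = {}
    for src in source_fields:
        scored = [
            (SequenceMatcher(None, normalize(src), normalize(tgt)).ratio(), tgt)
            for tgt in target_fields
        ]
        score, best = max(scored)
        suggestions[src] = (best if score >= threshold else None, round(score, 2))
    return suggestions
```

Whatever produces the suggestions, treating them as proposals with a confidence score and a manual-review path is what keeps automated mapping safe.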

Common Pitfalls to Avoid in COBOL to Cloud Migration

- Assuming COBOL = SQL

Mainframe data models aren’t 1:1 with relational databases. Without normalization and mapping, you risk data duplication and loss of meaning.

- Skipping Metadata Extraction

Ignoring implicit relationships in COBOL code can lead to broken dependencies post-migration.

- Migrating Dirty Data

Garbage in, garbage out. Legacy systems often contain decades of uncleaned records, so clean before you migrate.

- Underestimating Business Logic Embedded in Data

COBOL often hides business rules inside data layouts (e.g., negative balances stored as coded values, such as a sign nibble or an overpunched final digit). Missing these leads to broken logic.

- Overlooking Governance

Post-migration, data governance is non-negotiable. Without it, compliance risk skyrockets.

These mistakes often derail cloud migration, data model mapping, and digital transformation programs.
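The "business logic embedded in data" pitfall is easy to demonstrate with signed zoned-decimal fields, where the sign is overpunched onto the last digit. The sketch below assumes EBCDIC data with the common zone values 0xC (positive), 0xD (negative), and 0xF (unsigned); the function name is illustrative.

```python
from decimal import Decimal


def unpack_zoned(raw: bytes, scale: int = 0) -> Decimal:
    """Decode an EBCDIC zoned-decimal field with an overpunched sign."""
    if not raw:
        raise ValueError("empty zoned-decimal field")
    digits = [byte & 0x0F for byte in raw]           # low nibble holds the digit
    if any(d > 9 for d in digits):
        raise ValueError(f"invalid digit in {raw.hex()}")
    if any(byte >> 4 != 0xF for byte in raw[:-1]):   # all but the last byte are plain digits
        raise ValueError(f"unexpected zone in {raw.hex()}")
    sign_zone = raw[-1] >> 4                         # high nibble of the last byte is the sign
    if sign_zone not in (0xC, 0xD, 0xF):
        raise ValueError(f"invalid sign zone in {raw.hex()}")
    value = int("".join(map(str, digits)))
    if sign_zone == 0xD:
        value = -value
    return Decimal(value).scaleb(-scale)


# A PIC S9(5)V99 field holding -123.45 is stored as these seven bytes.
raw_balance = bytes.fromhex("F0F0F1F2F3F4D5")
naive_text = raw_balance.decode("cp037")    # what a plain text conversion would produce
balance = unpack_zoned(raw_balance, scale=2)
```

A plain EBCDIC-to-text conversion turns the final byte into a letter, so the field no longer parses as a number and the sign is lost unless the loader knows the layout.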

The Flynaut Advantage: Modernizing COBOL Data with Precision & AI Integration

Flynaut brings deep modernization experience across banking, insurance, healthcare, and logistics, where COBOL systems still dominate.

Our Data Modernization Framework includes:

- Discovery: Automated COBOL parsing and schema visualization.

- Design: Modern data model design aligned with your business use cases.

- Transformation: AI-assisted ETL pipelines for clean, structured migration.

- Validation: Continuous integrity and performance monitoring.

- Optimization: Cloud cost and performance tuning for long-term ROI.

Why Enterprises Choose Flynaut for COBOL Modernization & Cloud Migration:

- Proven success in COBOL-to-cloud transformations.

- Certified experts in AWS, Azure, and Google Cloud.

- GenAI-driven data mapping automation.

- Compliance-focused governance frameworks.

- Measurable ROI: faster, safer, and more cost-effective than traditional migration methods.

Flynaut helps organizations move from legacy data bottlenecks to cloud-native agility, without losing business-critical logic or data fidelity.

Case Study: Banking Data Modernization with COBOL to Cloud Migration

A top U.S. regional bank relied on COBOL-based systems for customer accounts and transactions. The challenge: migrating 20 million records from VSAM to Amazon Aurora without downtime.

Flynaut’s approach:

- Parsed 500+ COBOL COPYBOOKs automatically.

- Created a canonical entity model for Customer, Account, and Transaction data.

- Implemented smart ETL pipelines via AWS Glue and S3 staging.

- Validated 99.98% data integrity in post-migration QA.

Results:

- 42% faster query performance.

- $800K in annual mainframe cost savings.

- Real-time API access to transaction data for mobile apps.

This demonstrates our leadership in COBOL modernization services, enterprise app development services, and data integration consulting.

Best Practices for COBOL-to-Cloud Data Mapping & Legacy Data Transformation

- Start with Metadata Extraction: It’s your blueprint for success.

- Adopt a Canonical Data Model Early: Avoid fragmentation across teams.

- Automate Wherever Possible: Use AI for schema matching and validation.

- Prioritize Data Quality: Clean, normalize, and standardize before loading.

- Govern Rigorously: Document every transformation and lineage path.

- Iterate Incrementally: Migrate in batches; validate continuously.

These reflect our industry-leading approach to legacy system modernization and data transformation.

Conclusion: Unlocking Cloud-Native Agility Through COBOL Modernization

Moving from COBOL to cloud-native isn’t just about code; it’s about data intelligence. Done wrong, it can break decades of business continuity. Done right, it unlocks agility, scalability, and innovation.

The secret lies in data mapping: understanding how information flows, relates, and transforms across systems.

With Flynaut, modernization becomes a precise, governed, and ROI-driven journey. We don’t just migrate your data; we reimagine it for a future where information moves as fast as your business.

Transform legacy COBOL data into a cloud-native powerhouse.

Partner with Flynaut, the #1 data modernization and app development company in the U.S., to ensure accuracy, compliance, and speed every step of the way.